The future of media consumption in the Metaverse

Sponsored by


With lighter headsets on the horizon, the ways in which people might consume media in the future are endless! So for our latest contest, we asked ShapesXR users to create innovative prototypes for mixed reality applications that suggest new and exciting ways to consume media in the metaverse.

We asked designers to consider how media consumption in a mixed reality environment would adapt to real-world spaces, integrate social interaction, and enhance the overall user experience.

On December 16th, five finalists presented their prototypes, LIVE from ShapesXR, to a panel of industry professionals from Netflix, Meta, and Google.

Audience members tuned in from around the world via YouTube Live to watch each finalist take the virtual stage and present a ShapesXR design for a mixed reality application offering a new, creative way to consume media.

Each finalist had five minutes to present their project, and our three winners were announced at the conclusion of the event.

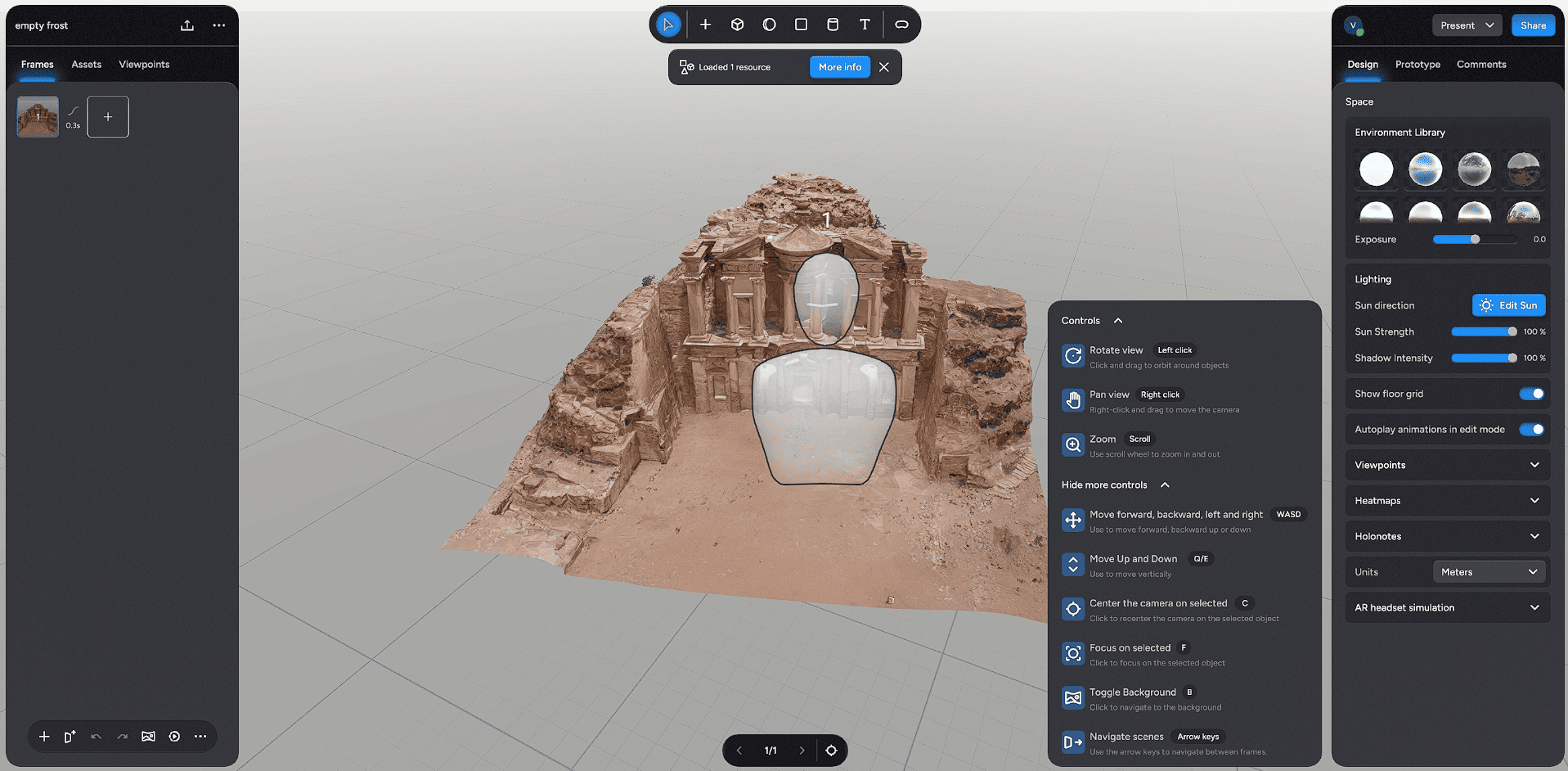

2025 was a year of shipping at scale for ShapesXR: expanding the platform, maturing the product, strengthening infrastructure, and laying the foundation for the next generation of ShapesXR. Across VR and Web, we delivered major features our community had been asking for, while quietly hardening performance, security, and usability across the entire product. Here’s a look back at the milestones that shaped ShapesXR in 2025.

Together, these releases pushed ShapesXR into a new phase, one that supports more realistic visuals, richer prototyping, broader device coverage, and enterprise-ready infrastructure.

July marked one of the most anticipated releases in ShapesXR history: Animation

We worked hard and gathered extensive feedback to make sure this game-changing feature feels familiar and easy to use, while still giving you the flexibility to turn your designs into stunning high-fidelity presentations and prototypes.

Creators simply set a start and an end state, and all objects and UI elements in the scene animate seamlessly between them, including properties such as size, shape, and materials. You can fine‑tune motion using presets like ease‑in, ease‑out, and linear, adjust transition timing, and preview results instantly. Auto‑generated frame thumbnails make it easy to manage complex sequences. Designers can also animate and prototype fully in 3D directly from the browser, then seamlessly transition into VR.
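To make the idea of start and end states with easing presets concrete, here is a minimal sketch of keyframe interpolation. It is an illustration under stated assumptions, not how ShapesXR implements animation: the `State` type, the `interpolate` function, and the choice of standard quadratic curves for ease-in and ease-out are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical easing presets: standard quadratic curves, assumed for
# illustration. ShapesXR's actual curves are not documented here.
def linear(t: float) -> float:
    return t

def ease_in(t: float) -> float:
    # Starts slowly, then accelerates toward the end state.
    return t * t

def ease_out(t: float) -> float:
    # Starts quickly, then decelerates into the end state.
    return 1.0 - (1.0 - t) * (1.0 - t)

EASINGS = {"linear": linear, "ease-in": ease_in, "ease-out": ease_out}

@dataclass
class State:
    """A hypothetical snapshot of one object's animatable properties."""
    position: tuple[float, float, float]
    scale: float

def lerp(a: float, b: float, t: float) -> float:
    """Linear interpolation between a and b for t in [0, 1]."""
    return a + (b - a) * t

def interpolate(start: State, end: State, elapsed: float,
                duration: float, easing: str = "linear") -> State:
    """Return the in-between state `elapsed` seconds into a transition."""
    if duration <= 0:
        raise ValueError("duration must be positive")
    # Normalize time to [0, 1], clamping before the start and after the end.
    t = min(max(elapsed / duration, 0.0), 1.0)
    # Reshape progress with the chosen easing curve, then blend each property.
    e = EASINGS[easing](t)
    position = tuple(lerp(a, b, e) for a, b in zip(start.position, end.position))
    return State(position=position, scale=lerp(start.scale, end.scale, e))
```

Halfway through a one-second move from x = 0 to x = 2, this sketch places the object at x = 0.5 with ease-in, x = 1.0 with linear, and x = 1.5 with ease-out, which is why ease-in feels slow at the start and ease-out feels slow at the end.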

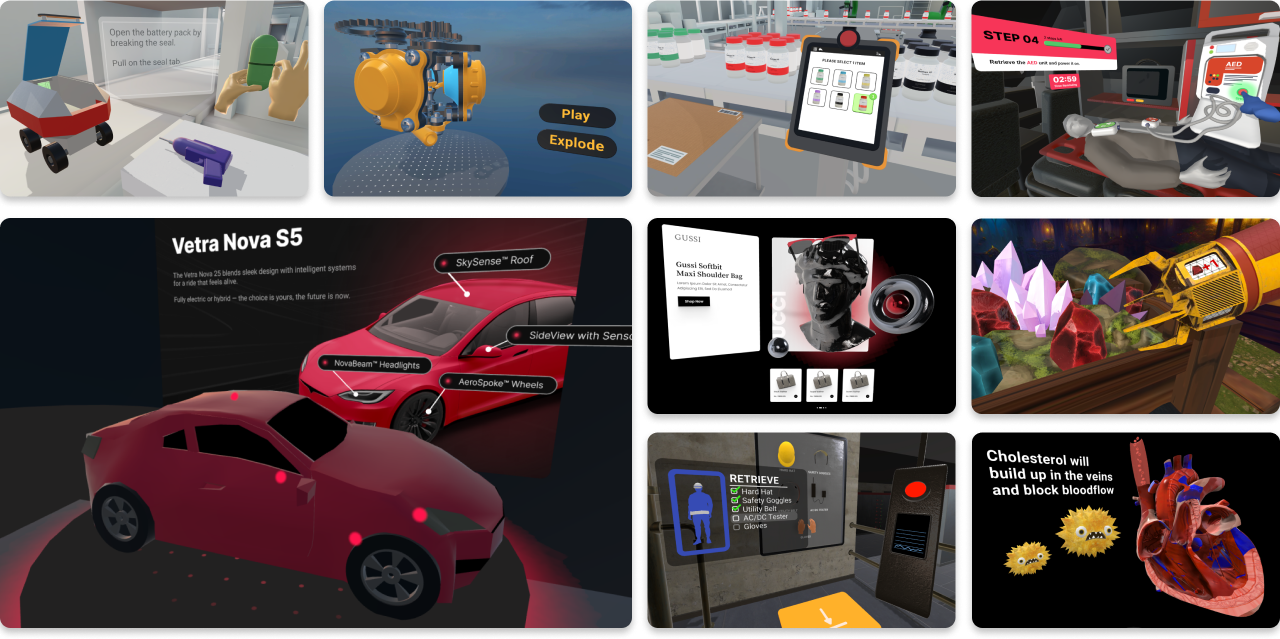

To showcase what’s possible with this new feature, we released new and updated sample spaces that demonstrate animated UI flows and training scenarios:

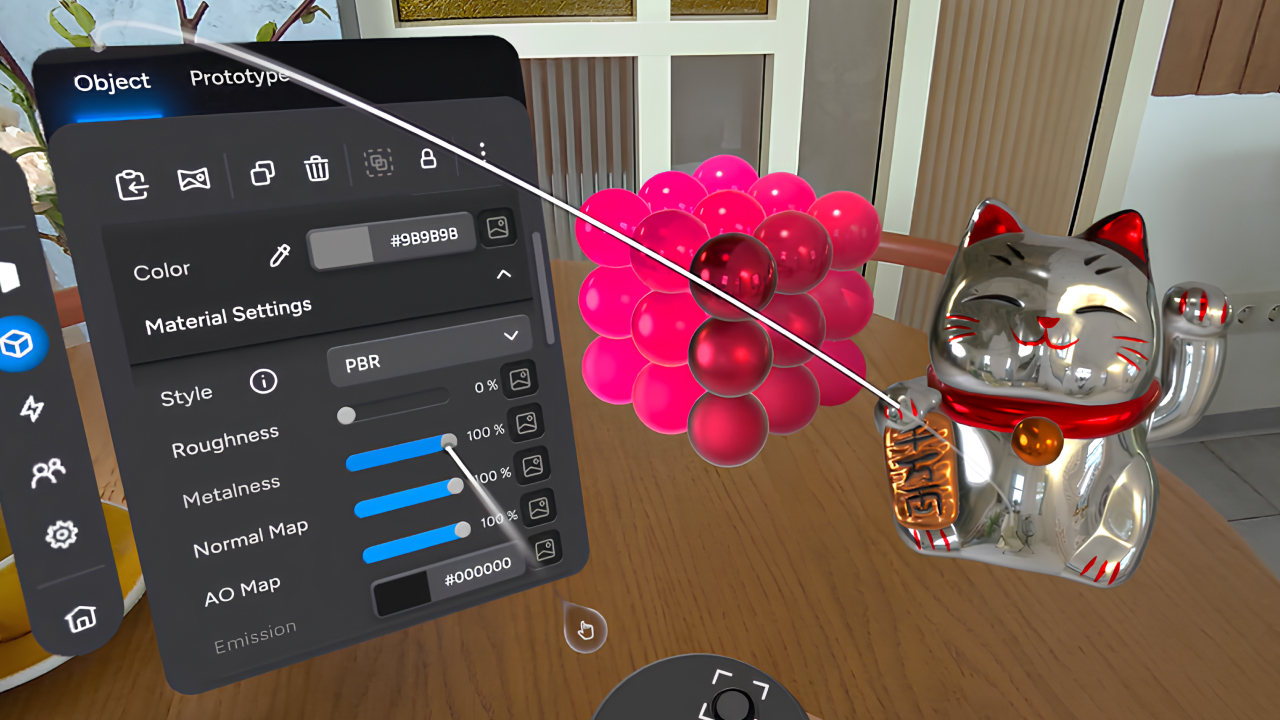

We introduced physically based rendering (PBR) materials with fine‑grained controls for roughness, metalness, normal maps, blend modes, and more. Reflective metals, realistic plastics, and higher‑fidelity imported assets brought a new level of realism to ShapesXR creations — both in VR and on the web.

To streamline the process, we added an expansive library of built-in materials and textures that can be applied to any shape in the scene, including concrete, brick, dirt, tile, and many more. Just choose a material from the library to instantly add realism to your scenes.

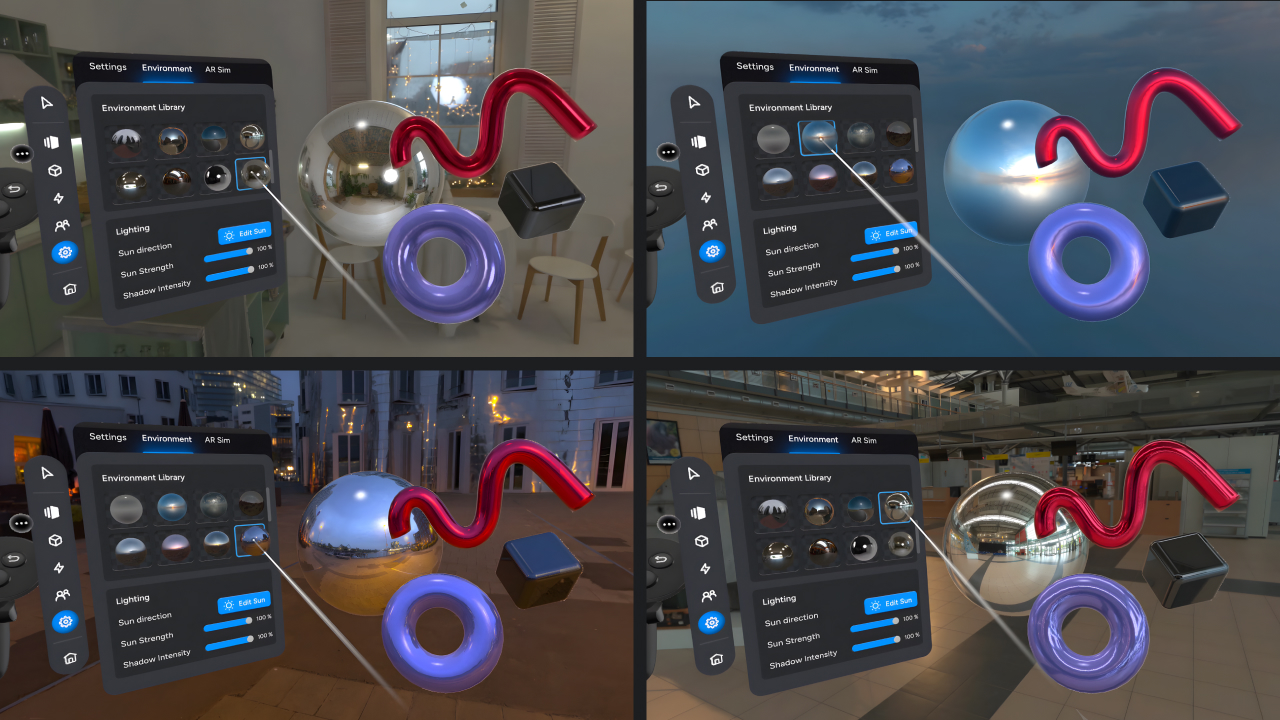

Skyboxes completed the visual upgrade by replacing the empty void with realistic environments that influence lighting and reflections. The result: scenes that feel grounded, contextual, and far more immersive.

In 2025, we fully delivered a new import and LOD system — a foundational upgrade that significantly improved performance, stability, and asset handling across ShapesXR. Large and complex assets now load more reliably, behave more predictably, and perform better in both VR and web environments.

Based on our internal testing, the system now supports models ranging from 500K up to 4–6 million polygons and loads them safely, though they may appear simplified or show minor artifacts. Assets in the 120K–500K polygon range, which previously caused major slowdowns, now run consistently with little to no visible quality loss, while models under 120K polygons benefit from additional optimization without visual changes.
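The polygon ranges above can be restated as a simple lookup, which may help when checking where a given model falls. This is a minimal sketch for reading the reported numbers, not how the ShapesXR import and LOD system classifies assets; the function name, the labels, how the exact 120K and 500K boundaries are assigned, and the 6 million ceiling are assumptions made for illustration.

```python
# Hypothetical labels for the polygon-count ranges reported from internal
# testing. Boundary handling at exactly 120K and 500K, and the 6M ceiling
# (the text says "4-6 million"), are assumptions.
UNDER_120K = "optimized further, no visual change"
MID_RANGE = "runs consistently, little to no visible quality loss"
HIGH_RANGE = "loads safely, may look simplified or show minor artifacts"
UNTESTED = "outside the reported tested range"

def expected_import_behavior(polygons: int) -> str:
    """Map a model's polygon count to the behavior reported for its range."""
    if polygons < 0:
        raise ValueError("polygon count cannot be negative")
    if polygons < 120_000:
        return UNDER_120K
    if polygons < 500_000:
        return MID_RANGE
    if polygons <= 6_000_000:
        return HIGH_RANGE
    return UNTESTED
```

For example, a 300K-polygon model lands in the middle range, while a 2-million-polygon model lands in the range that loads safely but may look simplified.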


The long‑awaited Measuring Tool arrived with full support for both metric and imperial units. Acting like a laser pointer, it snaps to surfaces, aligns with the world grid, and helps evaluate scale, positioning, and ergonomics, which is especially valuable when designing in mixed reality.

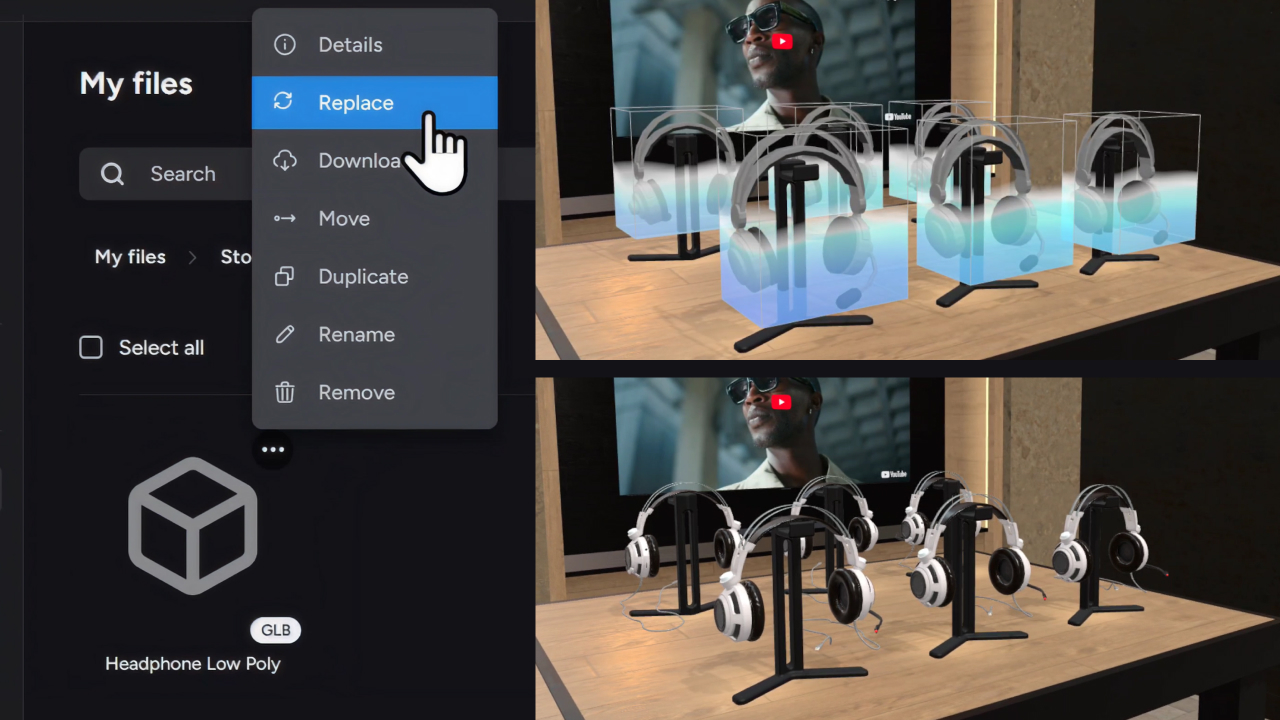

Creators can now import YouTube videos directly into ShapesXR. Pasting a link into the web dashboard makes the video instantly available as an asset, so it is easier than ever to build presentations and storytelling experiences, or to plan stores, environments, and any space that needs a bit of real-world context.

Replacing a model in a scene is now just a couple of clicks. Select an asset, choose “replace,” and watch it sync instantly across VR and web.

2025 also marked ShapesXR’s expansion to a brand‑new platform: GalaxyXR

This release included full GalaxyXR device support and hand‑tracking integration. With GalaxyXR, ShapesXR now reaches even more creators and teams across the XR ecosystem.

In 2025, we delivered major improvements to the web editor and dashboard, including a comprehensive UI/UX overhaul and significant performance optimizations—especially for in-headset browsers. We resolved key loading issues that had impacted reliability and implemented GDPR-aligned dashboard behavior, making the overall experience faster, more stable, and compliant for teams working across devices.

Alongside these features, we polished the gizmo for smoother interaction and improved shared spatial anchors for reliable in‑person collaboration.

We announced our brand-new product: Places

Places is a new product that extends our platform from XR design teams to those designing for the physical world. Places brings simplicity and realism to teams that need to plan spaces, arrange products, and visualize facilities with just a few clicks, all without VR expertise. With Places, you can make critical design decisions in context, supported by heatmaps and A/B testing. It’s a collaborative tool for teams to arrange products and equipment in real-time, immersed in a high-fidelity environment with hand-tracking and real physics.

In 2025, a growing set of case studies highlighted how ShapesXR is used in real-world education and enterprise contexts. Universities and colleges — including Bellevue College, Loughborough University, Conestoga College, and iEXCEL at the University of Nebraska Medical Center — used ShapesXR to teach immersive design, prototype medical and educational XR tools, and enable real-time collaboration with strong learning outcomes.

At the same time, enterprise teams demonstrated the speed and clarity of VR-native prototyping: NXR reduced XR training design cycles from weeks to days, and L+R partnered with Printemps New York to prototype and refine a digital wayfinding system for a flagship retail experience. Together, these stories show how ShapesXR supports faster iteration, clearer communication, and production-ready results across industries.


Diego is an AR and VR developer, a graduate student, an electrical engineer, and an avionics technician.

Diego designed an application that suggested ways in which airline passengers could consume media during flight. Mixed reality tabletop games to play with other passengers, real-time map overlays to view while looking out the window, and 360-degree flight cameras accessible through passengers' headsets were just a few of Diego's exciting ideas.


Imtissal is an immersive designer who came up with an innovative way to incorporate mixed reality into the analog experience of reading a magazine. Her design showed how an MR application could dramatically enhance the experience of reading National Geographic, with sidebar overlays, audio cues, and even an interactive 3D model of the solar system.

Michael is a senior XR designer and prototyper. He prototyped a mixed reality social media application called Portals, which would let users interact with short-form media content in highly immersive ways. Users could not only watch Portals content in AR but also create immersive volumetric content directly from their phones.


Andrew is a content creator, XR developer, digital strategist, and world builder at CineConcerts. His highly immersive design would transport audiences into virtual concert halls, where they would experience live orchestral performances of popular film scores while the films play on giant virtual screens.
